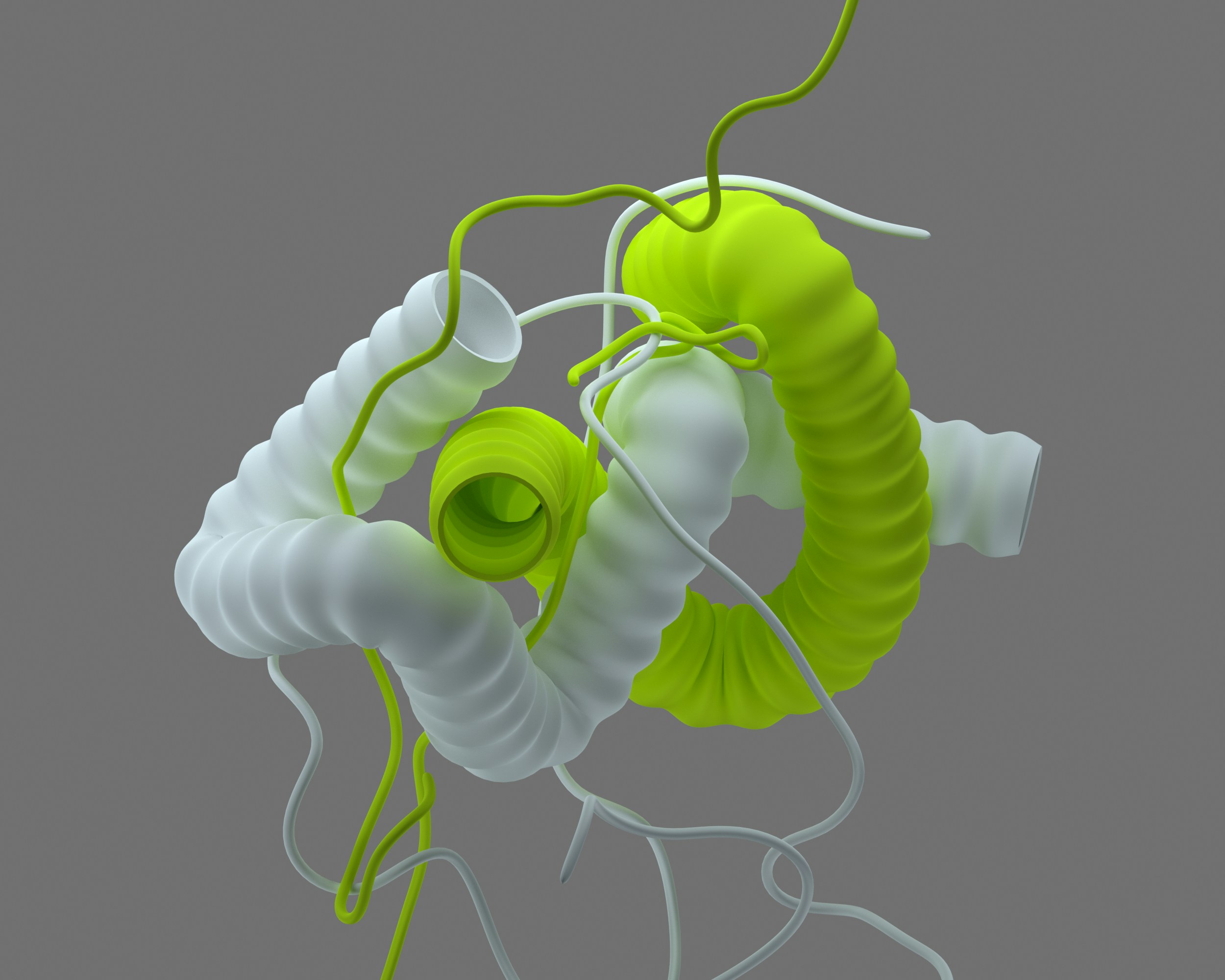

MegaDock Overview

MegaDock 4.0 is high-throughput protein-protein docking software designed for large-scale virtual screening. It uses a fast Fourier transform (FFT)-based docking method that can take advantage of GPU acceleration, setting it apart from traditional CPU-based docking tools. GPU acceleration makes MegaDock well suited to workloads that require a large number of docking simulations per protein-protein interaction, such as high-throughput virtual screening for drug discovery.

The scale of protein-protein docking simulations varies with the use case, computational resources, and docking methodology. Academic research and small-scale studies typically run 1,000 to 100,000 docking simulations per protein pair, focusing on select candidate complexes with tools like HADDOCK and RosettaDock. High-throughput docking (HTD) for drug discovery and AI-driven approaches scale up to 100,000 to 10 million simulations per protein-protein interaction, leveraging HPC resources for virtual screening. At the largest scale, systematic studies and whole-proteome docking can range from 10 million to 1 billion simulations, often integrating AI/ML models to prioritize high-confidence interactions.

Despite its advantages, running MegaDock at scale presents several challenges, especially on cloud GPUs. GPUs are among the most in-demand cloud resources, which makes them difficult to procure, expensive to use, and challenging to scale efficiently. Because of high demand from AI, high-performance computing (HPC), and other compute-intensive workloads, cloud providers often face GPU shortages, leading to limited availability and long provisioning times. Scaling GPU resources dynamically can be unpredictable, as instances may not be available when needed in a particular cloud region and availability zone. Large-scale docking compounds these problems: the workloads are computationally intensive, GPU time is costly, jobs must be orchestrated efficiently to minimize idle time and data transfer delays, and the output data, often exceeding 1 TB, can slow down post-processing and analysis. These challenges call for an intelligent orchestration framework that optimizes performance while reducing costs. This is where Fovus, an AI-powered, serverless HPC platform, delivers significant improvements.

Introduction to Fovus

Fovus is an AI-powered, serverless high-performance computing (HPC) platform designed to enhance performance, scalability, and cost efficiency for complex workloads like MegaDock. By automating cloud logistics and optimizing HPC strategies, Fovus aims to keep the trade-off between runtime and cost optimal as cloud infrastructure changes. The platform provides automated benchmarking to evaluate instance configurations, AI-driven optimization to ensure efficient computation, and multi-cloud-region auto-scaling to distribute workloads across regions dynamically. By intelligently leveraging spot instances with failover capabilities, Fovus further reduces costs. Its serverless model eliminates manual setup, allowing single-command deployment with pay-per-use billing.

Benefits of Fovus

- Free Automated Benchmarking: Evaluates how different instance choices and system configurations (CPUs, GPUs, memory, and more) impact the runtime and cost of MegaDock runs.

- AI-driven Strategy Optimization: Automatically determines optimal instance choices and configurations, ensuring efficient and cost-effective computation.

- Dynamic Multi-Cloud-Region Auto-Scaling: Allocates optimal GPUs across multiple cloud regions and availability zones, efficiently scaling up GPU clusters for large-batch simulations.

- Intelligent Spot Instance Utilization: Selects spot instances based on availability and pricing dynamics, with spot-to-spot failover capabilities, to minimize computation costs.

- Continuous Improvement: Automatically updates benchmarking data and refines HPC strategies as cloud infrastructure evolves.

- Serverless HPC Model: Eliminates manual setup through AI-driven automation, allowing for single-command or few-click deployment, with users paying only for runtime.

Optimizing MegaDock on Fovus

MegaDock Two-Stage Workflow Optimization Strategy

MegaDock operates in two distinct computational stages:

- Docking Stage (GPU-Optimized): Evaluates potential binding models between receptor and ligand molecules using FFT, benefiting from GPU acceleration.

- Decoy Generation and Output (CPU-Optimized): Generates decoy PDB files based on docking results, concatenating them with receptor structures to create docked complex files. This stage runs on CPUs and typically involves transferring large output data volumes to a storage endpoint.

Given the substantial output size (over 1 TB for the 2 million decoy files produced by 1,000 protein-protein dockings at 2,000 docking poses per protein pair), efficient data handling is critical. Running both stages sequentially on GPU instances would be inefficient, as the CPU-bound decoy generation and lengthy output data transfer would leave expensive GPU resources idle. To optimize for performance and cost, Fovus separates these stages, so that GPU instances focus solely on docking while decoy generation and output data transfer run in parallel on CPU instances.
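To make the data volume concrete, the figures quoted above can be turned into rough per-file and per-pair sizes. This is only a back-of-the-envelope sketch: it treats "over 1 TB" as a lower bound of 10^12 bytes (decimal terabyte, an assumption), so the results are minimum averages rather than measured file sizes.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope output sizing from the figures quoted in the text.
pairs=1000                 # protein-protein dockings per task
poses_per_pair=2000        # docking poses (decoys) per pair
total_bytes=$((10**12))    # assumption: "over 1 TB" taken as a 10^12-byte lower bound

decoys=$((pairs * poses_per_pair))
bytes_per_decoy=$((total_bytes / decoys))
bytes_per_pair=$((total_bytes / pairs))

echo "decoy files:            $decoys"
echo "avg bytes per decoy >=  $bytes_per_decoy"
echo "bytes per pair      >=  $bytes_per_pair"
```

Under these assumptions each decoy file averages at least about 500 KB, and each protein pair contributes at least about 1 GB of output, which is why the transfer step is treated as a stage in its own right.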

How Fovus Optimizes MegaDock Execution

To improve efficiency, we recommend packaging enough docking runs (e.g., 1,000 protein-protein docking simulations) into a single task, run in series, so that each task takes between 1 and 2 hours. This amortizes the overhead of initial instance and software environment setup and makes it easy to leverage spot instances. Similarly, we recommend dividing the decoy generation and output data transfer for each docking task into 10 parallel CPU tasks to reduce data transfer time.
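As an illustration of the 10-way split, the decoy generation script in the Experimental Setup section selects its slice of the task table with `tail -n +$START_INDEX ... | head -n 100`. A minimal sketch of how the ten `START_INDEX` values could be derived is shown here. The helper name `chunk_starts` is hypothetical, and the two header lines are an assumption inferred from the `tail -n +3` in the docking loop; how Fovus actually passes the index to each task is not specified in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first table line (START_INDEX) for each
# parallel decoy generation task.
# Assumptions: the task table starts with `header_lines` header lines and
# every CPU task processes `rows_per_task` consecutive rows.
chunk_starts() {
    local total_rows=$1 rows_per_task=$2 header_lines=$3
    local start=$((header_lines + 1))
    local last=$((header_lines + total_rows))
    while (( start <= last )); do
        echo "$start"
        start=$((start + rows_per_task))
    done
}

# 1,000 docking rows, 100 rows per CPU task, 2 header lines
chunk_starts 1000 100 2
```

With these inputs the helper prints ten values (3, 103, ..., 903), one per CPU task. Each task would then export its value as `START_INDEX` before running the decoy script.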

Fovus benchmarks and optimizes MegaDock execution using two separate benchmarking profiles:

- Docking Stage Profile: This profile helps identify the optimal GPU choice and system configurations for the packaged docking stage execution.

- Decoy Generation and Output Profile: This profile helps determine the optimal CPU choice, memory configuration, and parallel computing settings (number of parallel processes) for executing the decoy generation and output data transfer stage.

Fovus benchmarks each stage separately and auto-determines each stage’s optimal HPC strategy (GPU/CPU choices, system configurations, and parallel computing settings). By dynamically optimizing cloud operations and resource utilization in both stages, Fovus ensures computational efficiency and time-cost optimality, maximizing the utilization of powerful GPU resources.

Fovus leverages the most cost-effective spot GPUs across multiple cloud regions and availability zones in real time, taking into account MegaDock benchmarking data, interruption probability, and the availability and pricing dynamics of spot instances to minimize costs while maximizing performance. If a spot instance is interrupted, Fovus automatically re-queues the task, transfers all existing data to a new optimal spot GPU instance, and resumes computation from the last completed docking (by checking whether docking outputs already exist and skipping completed ones). Additionally, by dynamically allocating spot GPUs across multiple cloud regions and availability zones, Fovus maximizes GPU availability and scalability, minimizing time to insight for high-throughput virtual screening.

Experimental Setup

To evaluate the performance of MegaDock on Fovus, we conducted docking simulations using 1,000 pairs of randomly selected protein structures (antibodies and antigens) from the RCSB Protein Data Bank. We generated 2,000 docking poses for each protein pair, resulting in 2 million decoy structures.

To make the best use of spot GPUs, the run script detects existing docking outputs and skips a docking run if its output already exists. This effectively creates a checkpoint upon completion of each docking run. An interrupted workload can then use Fovus's spot-to-spot failover capability to resume from the first docking run that has not yet produced output, preventing wasted compute time and enabling smooth, cost-effective execution on spot GPUs.
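One caveat with existence-based checkpoints is that a run interrupted partway through may leave a non-empty but incomplete output file, which a simple existence check would treat as finished. The sketch below is not the script used in this study; it is a hedged variant that writes to a temporary name and renames it only on success. The function name `dock_one`, the `.partial` suffix, and the `DOCK_CMD` override (added so the function can be exercised without a GPU) are all assumptions for illustration. The `-R`, `-L`, and `-o` flags are the ones used in the article's own docking command.

```bash
#!/usr/bin/env bash
# Hypothetical variant of the docking step with an atomic checkpoint.
# DOCK_CMD is an illustrative override; it defaults to megadock-gpu.
dock_one() {
    local receptor=$1 ligand=$2 output_file=$3
    local partial="${output_file}.partial"

    # A non-empty final file means this docking already completed.
    if [[ -s "$output_file" ]]; then
        echo "Skipping completed: $output_file"
        return 0
    fi

    # Discard leftovers from an interrupted attempt, then dock into the
    # temporary file and rename it only if the docking command succeeds.
    rm -f "$partial"
    if "${DOCK_CMD:-megadock-gpu}" -R "$receptor" -L "$ligand" -o "$partial"; then
        mv "$partial" "$output_file"
    else
        rm -f "$partial"
        return 1
    fi
}
```

Because a rename within the same filesystem is atomic, the final output name only ever appears once the docking command has exited successfully, so the skip check cannot mistake a truncated file for a completed run.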

Each docking run was structured as follows. The docking stage was executed using:

```bash
# Read receptor, ligand, and output path from the task table, starting at line 3
tail -n +3 "/data/task.table" | while IFS=$'\t' read -r receptor ligand output_file; do
    # A non-empty output file marks a completed docking run (the checkpoint)
    if [[ -f "$output_file" && -s "$output_file" ]]; then
        echo "Skipping completed: $output_file"
    else
        megadock-gpu -R "$receptor" -L "$ligand" -o "$output_file"
    fi
done
```

For decoy generation:

```bash
# Each CPU task processes 100 rows of the task table, starting at START_INDEX
tail -n "+${START_INDEX}" "$TASK_TABLE" | head -n 100 | while IFS=$'\t' read -r receptor ligand output_file; do
    # Skip blank or incomplete rows
    [[ -z "$receptor" || -z "$ligand" || -z "$output_file" ]] && continue

    # Name the per-pair directories after the docking output file, minus its extension
    pdb_id=$(basename "$output_file" | sed 's/\.[^.]*$//')
    lig_path="./lig/${pdb_id}"
    decoy_path="./decoy/${pdb_id}"
    mkdir -p "$lig_path" "$decoy_path"

    for i in $(seq 1 2000); do
        decoy_file_path="${decoy_path}/decoy.${i}.pdb"
        if [ -f "$decoy_file_path" ]; then
            # Skip completed docked complexes
            continue
        fi
        # Generate the i-th ligand pose, then concatenate it with the receptor
        decoygen "${lig_path}/lig.${i}.pdb" "$ligand" "$output_file" "$i"
        cat "$receptor" "${lig_path}/lig.${i}.pdb" > "${decoy_file_path}"
    done
done
```

In high-throughput virtual screening, the large batch of docking simulations is highly parallelizable, allowing for a significant reduction in time-to-insight through massive parallelization. As a result, minimizing the runtime of individual simulations is less critical, making cost efficiency the top priority. For this study, the docking job was submitted to Fovus with the objective of cost minimization, prioritizing affordability over speed. Fovus automatically selected the HPC strategy that minimized cloud costs for this workload.

Key performance metrics analyzed included:

- GPU-accelerated docking runtime and cost

- Decoy generation runtime and cost

- Data transfer runtime and cost

- Total runtime and cost

- Total runtime and cost without data transfer

Case Study Results

| Stage | Runtime (minutes) | Cost (USD) |
|---|---|---|
| 1. GPU-Accelerated Docking | 97 | $2.92 |
| 2. Decoy Generation | 37 | $0.17 |
| 3. Data Transfer | 39 | $0.21 |
| Total | 173 | $3.30 |
| Total without Data Transfer | 134 | $3.09 |

The optimized workflow completed 1,000 protein-protein docking simulations in just over two hours (134 minutes) at a cost of $3.09 for docking and decoy generation. Because a thousand such tasks (1,000 protein-protein dockings per task) can be distributed on Fovus to run in parallel on 1,000 spot GPUs across multiple cloud regions and availability zones, these results suggest that a high-throughput virtual screening of 1 million protein-protein dockings with MegaDock on Fovus would cost approximately $3,087 and take only about 2.2 hours overall, thanks to massive parallelization. The AI-optimized spot instance strategy significantly reduces compute costs compared to on-demand pricing models. Making the workloads resumable through per-docking checkpoints minimizes lost work when spot instances are interrupted, and the two-stage workflow optimization strategy improves overall efficiency and mitigates data transfer bottlenecks.
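The extrapolation above is simple arithmetic on the table's "Total without Data Transfer" row, and can be reproduced as a sketch. The function name `estimate` is hypothetical, and the calculation assumes all tasks run fully in parallel with no queueing, interruptions, or price changes. Using the rounded table value of $3.09 per task gives roughly $3,090, so the $3,087 quoted above presumably reflects the unrounded per-task cost.

```bash
#!/usr/bin/env bash
# Hypothetical estimator: scale per-task cost and runtime to many parallel tasks.
# Assumes perfect parallelism, so wall-clock time equals one task's runtime.
estimate() {
    local tasks=$1 cost_per_task=$2 minutes_per_task=$3 dockings_per_task=$4
    LC_ALL=C awk -v n="$tasks" -v c="$cost_per_task" \
                 -v m="$minutes_per_task" -v d="$dockings_per_task" 'BEGIN {
        printf "total_dockings=%d\n", n * d
        printf "total_cost_usd=%.0f\n", n * c
        printf "wall_clock_hours=%.1f\n", m / 60
        printf "cost_per_docking_usd=%.5f\n", c / d
    }'
}

# 1,000 tasks, $3.09 and 134 minutes per task, 1,000 dockings per task
estimate 1000 3.09 134 1000
```

The per-docking figure this produces (about $0.003) is a convenient way to compare against other instance choices or pricing models.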

These results highlight how Fovus's AI-driven strategy enables efficient, cost-effective execution of MegaDock at scale. With an AI-optimized HPC strategy, dynamic multi-cloud-region auto-scaling, and intelligent spot instance utilization, Fovus reduces the time-to-insight of protein-protein docking at the scale of millions of dockings from days to hours, making high-throughput virtual screening using MegaDock on cloud GPUs more accessible and more affordable.

Conclusion

Running MegaDock on Fovus significantly improves cost, efficiency, and scalability. By leveraging AI-driven HPC strategies, MegaDock’s docking workflow is optimized to run at scale without the typical inefficiencies associated with cloud-GPU-based docking. Automated resource selection ensures time- and cost-effective computing, two-stage workflow optimization maximizes GPU utilization, and multi-cloud-region scaling enables rapid large-scale docking. Spot instance utilization significantly reduces costs, while spot-to-spot failover capability ensures result integrity and efficiency for resumable workloads.

As cloud infrastructure continues to evolve, Fovus automatically updates the HPC strategy for MegaDock to take advantage of infrastructure upgrades as they become available. This keeps computational strategies efficient, delivers sustained performance and cost benefits, and helps researchers and organizations reach insights faster.

For organizations looking to scale protein-protein docking simulations, Fovus provides an intelligent, serverless HPC solution that makes large-scale docking cost-effective and highly efficient. Whether for drug discovery, molecular research, or computational biology, Fovus ensures MegaDock runs at peak performance with minimal cost.

Interested in optimizing your HPC workloads? Try Fovus today for free and experience the future of AI-driven cloud supercomputing for MegaDock and beyond.